If you aren’t careful, you might select a less-than-optimal option

Oftentimes we want to test multiple variants against a control at the same time. There are many reasons for this: sometimes we don’t want to wait to run each variant against the control in series, sometimes we’re indecisive, and sometimes we don’t want to create another ticket for a future sprint (I don’t judge). Whatever the reason, here we are, with multiple variants running at once. At least we’re not running an MVT (multivariate test). (Joking aside, there are some justifiable reasons to run MVTs, but I’d only do so after running a series of split tests. The up-front work to set up an MVT is worth the trouble in my opinion. Don’t @ me.)

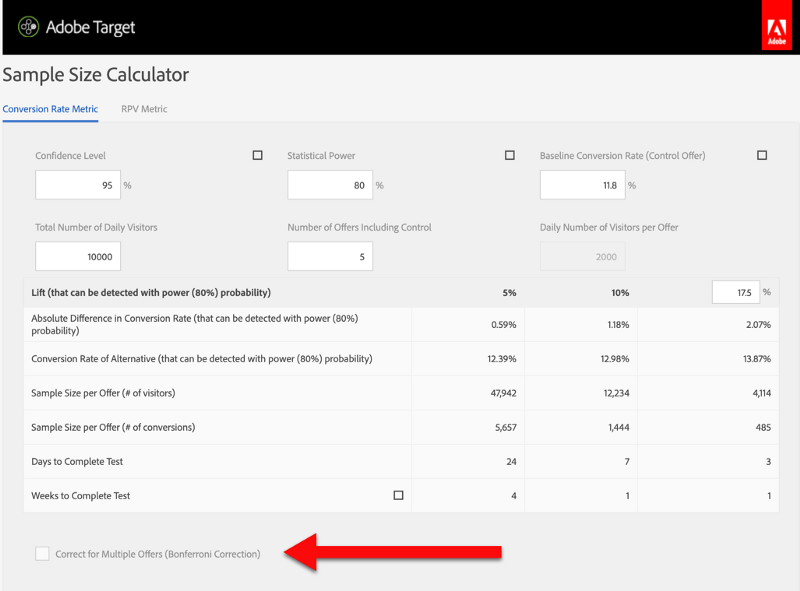

One common mistake is failing to account for multiple comparisons. Simply put, if you dig hard enough, you’ll eventually find something that’s statistically significant, and it’s most likely a false positive. A common procedure to control for multiple comparisons is something like a Bonferroni correction (remember kids, Wikipedia is not a reliable reference). In essence, this correction lowers the alpha used for each comparison (Bonferroni divides it by the number of comparisons), which makes it harder for a variant to be declared different because the threshold is harder to reach. For those familiar with Adobe Target, you can see an option to correct for this on its sample size calculator below (I personally don’t love that it’s a bit hard to see).
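To make the correction concrete, here is a minimal Python sketch of a Bonferroni adjustment. The p-values are made up for illustration, and `bonferroni_significant` is a hypothetical helper written for this example, not part of Adobe Target or any other testing tool. With three comparisons at an alpha of 0.05, each comparison is judged against 0.05 / 3 ≈ 0.0167. Assuming the comparisons are independent, the chance of at least one false positive without the correction would be 1 − 0.95³ ≈ 14%.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Hypothetical helper: for each comparison, report whether it stays
    significant after a Bonferroni correction (p compared against alpha / m)."""
    m = len(p_values)
    adjusted_alpha = alpha / m
    return [p < adjusted_alpha for p in p_values]


# Made-up p-values for three variant-vs-control comparisons.
p_values = {"B": 0.030, "C": 0.012, "D": 0.200}

# Uncorrected: every comparison is judged against 0.05 on its own.
naive = {name: p < 0.05 for name, p in p_values.items()}

# Corrected: every comparison is judged against 0.05 / 3.
corrected = dict(zip(p_values, bonferroni_significant(list(p_values.values()))))

print("alpha per comparison:", 0.05 / len(p_values))
print("uncorrected:", naive)
print("Bonferroni:", corrected)
```

Variant B clears the usual 0.05 bar on its own but not the corrected one, which is exactly the kind of borderline result the correction is meant to screen out. The trade-off is that Bonferroni is conservative, so you need more traffic to detect a real difference.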

However, an even more common mistake is to pick the variant that produces the highest lift without checking whether it’s statistically different from the other variants. Unless you check the difference between the variants themselves, you risk selecting a worse-performing variant!
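Here is a minimal Python sketch of that check, using a pooled two-proportion z-test on conversion rates. The visitor and conversion counts are made up, and `two_proportion_z_test` is a hypothetical helper written for this example (not Adobe Target’s or any other tool’s method). It relies on the normal approximation, which assumes reasonably large samples.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Hypothetical helper: pooled two-proportion z-test.
    Returns (z, two-sided p-value) for B's rate minus A's rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function; double the upper tail.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Made-up results: (visitors, conversions) per arm.
arms = {
    "control": (10_000, 500),
    "B": (10_000, 580),
    "C": (10_000, 600),
}

control_n, control_conv = arms["control"]
for name in ("B", "C"):
    n, conv = arms[name]
    lift = (conv / n) / (control_conv / control_n) - 1
    print(f"{name}: lift vs control = {lift:.1%}")

# C has the highest lift, but is it actually different from B?
n_b, conv_b = arms["B"]
n_c, conv_c = arms["C"]
z, p = two_proportion_z_test(conv_b, n_b, conv_c, n_c)
print(f"C vs B: z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers, C shows the highest lift (20% vs. 16% for B), but the C-vs-B test gives z ≈ 0.6 and p ≈ 0.55, so the data can’t tell the two variants apart. Keep in mind that this head-to-head test is one more comparison, so it belongs in your multiple-comparison accounting too.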

What other gotchas do you watch out for when running multiple variants? I’d love to hear them!
